I'm trying to build a custom script that compiles an ONNX model into TIDL artifacts, so that I can integrate it into my DL pipeline.

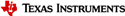

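The first step toward this should have been simple: copy the key parts of onnxrt_ep.py (from the edgeai-tidl-tools repo). However, when I compile a sample model (deeplabv3lite_mobilenetv2.onnx) with the same configuration and the same calibration images that onnxrt_ep.py uses, inference on an image with the resulting artifacts produces output that differs far too much from the output of the example script.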
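Since the comparison depends on both scripts really using the same options, one way to check that is to print the dictionary onnxrt_ep.py passes to InferenceSession (for example, a temporary print just before that call) and diff it against delegate_options from the custom script. A minimal sketch; diff_options is a hypothetical helper, and the two dictionaries in the demo are placeholders, not the actual onnxrt_ep.py settings.

```python
from typing import Any, Dict, Tuple

_MISSING = "<missing>"


def diff_options(a: Dict[str, Any], b: Dict[str, Any]) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (value_in_a, value_in_b)} for every key whose value differs.

    A key present in only one dict is reported with "<missing>" on the other side.
    """
    diffs = {}
    for key in sorted(set(a) | set(b)):
        va = a.get(key, _MISSING)
        vb = b.get(key, _MISSING)
        if va != vb:
            diffs[key] = (va, vb)
    return diffs


if __name__ == "__main__":
    # Placeholder values for illustration only.
    custom = {"tensor_bits": 8, "accuracy_level": 1,
              "advanced_options:calibration_frames": 2}
    reference = {"tensor_bits": 8, "accuracy_level": 1,
                 "advanced_options:calibration_frames": 2,
                 "advanced_options:calibration_iterations": 5}
    for key, (mine, theirs) in diff_options(custom, reference).items():
        print(f"{key}: custom={mine!r} reference={theirs!r}")
```

An empty result means the two option sets are identical, which narrows the search to preprocessing, calibration data, or the model file itself.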

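About my environment:

- The environment is set up in a Docker container based on Ubuntu 18

- edgeai-tidl-tools is installed at /home/root/edgeai-tidl-tools

- The repository is checked out at tag 08_05_00_11

- The script ~/edgeai-tidl-tools/examples/osrt_python/ort/onnxrt_ep.py ran successfully before my custom script was executed, so the .onnx file had already been downloaded and optimized by tidlOnnxModelOptimize()

- These environment variables are set before running either script: export DEVICE=J7 && export SOC=am68pa

- My custom script lives in the ~/edgeai-tidl-tools directory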
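To rule out an environment mismatch between the two runs, the variables and paths listed above can be checked from inside Python before any session is created. A minimal sketch; check_environment is a hypothetical helper, and the expected values are simply the ones stated in this post.

```python
import os
from pathlib import Path
from typing import List, Mapping

# Expected values taken from the environment description in this post.
EXPECTED_ENV = {"DEVICE": "J7", "SOC": "am68pa"}
EXPECTED_PATHS = [
    "/home/root/edgeai-tidl-tools/tidl_tools",
    "/home/root/edgeai-tidl-tools/models/public/deeplabv3lite_mobilenetv2.onnx",
]


def check_environment(env: Mapping[str, str] = os.environ,
                      paths: List[str] = EXPECTED_PATHS) -> List[str]:
    """Return human-readable problems; an empty list means every check passed."""
    problems = []
    for name, expected in EXPECTED_ENV.items():
        actual = env.get(name)
        if actual != expected:
            problems.append(f"{name}={actual!r}, expected {expected!r}")
    for p in paths:
        if not Path(p).exists():
            problems.append(f"missing path: {p}")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

Running it at the top of both scripts (or once in the same shell) confirms they see the same DEVICE/SOC values and the same model file.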

Here is the script I built:

```python
import os
import shutil
from pathlib import Path

import numpy as np
import onnx
import onnxruntime as rt
from PIL import Image

# Ask the TIDL runtime to print performance statistics
os.environ["TIDL_RT_PERFSTATS"] = "1"

so = rt.SessionOptions()

input_model_path = Path('/home/root/edgeai-tidl-tools/models/public/deeplabv3lite_mobilenetv2.onnx')
output_dir = Path('./convertedModel/')
artifacts_dir = output_dir / 'artifacts'

# Start from an empty output directory (ignore_errors: it may not exist on the first run)
shutil.rmtree(str(output_dir), ignore_errors=True)
artifacts_dir.mkdir(parents=True, exist_ok=True)

active_model_path = output_dir / input_model_path.name

required_options = {
    "tidl_tools_path": '/home/root/edgeai-tidl-tools/tidl_tools',
    "artifacts_folder": str(artifacts_dir),
}

optional_options = {
    "platform": "J7",
    "version": "7.2",
    "tensor_bits": 8,
    "debug_level": 1,
    "max_num_subgraphs": 16,
    "deny_list": "",  # "MaxPool"
    "deny_list:layer_type": "",
    "deny_list:layer_name": "",
    "model_type": "",  # OD
    "accuracy_level": 1,
    "advanced_options:calibration_frames": 2,
    "advanced_options:calibration_iterations": 5,
    "advanced_options:output_feature_16bit_names_list": "",
    "advanced_options:params_16bit_names_list": "",
    "advanced_options:mixed_precision_factor": -1,
    "advanced_options:quantization_scale_type": 0,
    "advanced_options:high_resolution_optimization": 0,
    "advanced_options:pre_batchnorm_fold": 1,
    "ti_internal_nc_flag": 1601,
    "advanced_options:activation_clipping": 1,
    "advanced_options:weight_clipping": 1,
    "advanced_options:bias_calibration": 1,
    "advanced_options:add_data_convert_ops": 0,
    "advanced_options:channel_wise_quantization": 0,
}

delegate_options = {}
delegate_options.update(required_options)
delegate_options.update(optional_options)

# Model compilation
# Run ONNX shape inference and save the shape-annotated model in output_dir
onnx.shape_inference.infer_shapes_path(str(input_model_path), str(active_model_path))

EP_list = ['TIDLCompilationProvider', 'CPUExecutionProvider']
compilation_session = rt.InferenceSession(str(active_model_path), providers=EP_list,
                                          provider_options=[delegate_options, {}], sess_options=so)

path_calibration_images = [
    '/home/root/edgeai-tidl-tools/test_data/airshow.jpg',
    '/home/root/edgeai-tidl-tools/test_data/ADE_val_00001801.jpg',
]

input_name = compilation_session.get_inputs()[0].name

# Feed each calibration image through the compilation session as uint8 NCHW (1, 3, 512, 512)
for path_sample in path_calibration_images:
    calib_image = Image.open(str(path_sample)).convert('RGB').resize((512, 512))
    calib_image = np.array(calib_image).astype(np.uint8)
    calib_image = np.expand_dims(calib_image, 0).transpose([0, 3, 1, 2])
    calibration_output = list(compilation_session.run(None, {input_name: calib_image}))

# Inference TIDL (artifacts produced by this script)
test_image = Image.open('./person_and_car.jpg').convert('RGB').resize((512, 512))
test_image = np.array(test_image).astype(np.uint8)
test_image = np.expand_dims(test_image, 0).transpose([0, 3, 1, 2])

EP_list = ['TIDLExecutionProvider', 'CPUExecutionProvider']
inference_session_custom_script = rt.InferenceSession(str(active_model_path), providers=EP_list,
                                                      provider_options=[delegate_options, {}], sess_options=so)
input_name = inference_session_custom_script.get_inputs()[0].name
inference_output_custom_script = list(inference_session_custom_script.run(None, {input_name: test_image}))[0]

processed_image_custom_script = np.squeeze(inference_output_custom_script)
processed_image_custom_script = Image.fromarray(processed_image_custom_script.astype(np.uint8))
processed_image_custom_script.save(str(output_dir / 'processed_image_custom_script.png'))

# Inference onnxrt_ep.py (artifacts produced by the example script)
ti_script_artifacts_path = Path('/home/root/edgeai-tidl-tools/model-artifacts/ss-ort-deeplabv3lite_mobilenetv2/')
ti_script_model_path = ti_script_artifacts_path / 'deeplabv3lite_mobilenetv2.onnx'

# Copy the options instead of aliasing them, so delegate_options itself is not modified
delegate_options_ti_script = dict(delegate_options)
delegate_options_ti_script['artifacts_folder'] = str(ti_script_artifacts_path)

EP_list = ['TIDLExecutionProvider', 'CPUExecutionProvider']
inference_session_ti_script = rt.InferenceSession(str(ti_script_model_path), providers=EP_list,
                                                  provider_options=[delegate_options_ti_script, {}], sess_options=so)
input_name = inference_session_ti_script.get_inputs()[0].name
inference_output_ti_script = list(inference_session_ti_script.run(None, {input_name: test_image}))[0]

processed_image_ti_script = np.squeeze(inference_output_ti_script)
processed_image_ti_script = Image.fromarray(processed_image_ti_script.astype(np.uint8))
processed_image_ti_script.save(str(output_dir / 'processed_image_ti_script.png'))

# Inference ONNX (float reference, CPU only)
EP_list = ['CPUExecutionProvider']
inference_session_onnx = rt.InferenceSession(str(active_model_path), providers=EP_list, sess_options=so)
input_name = inference_session_onnx.get_inputs()[0].name
inference_output_onnx = list(inference_session_onnx.run(None, {input_name: test_image}))[0]

processed_image_onnx = np.squeeze(inference_output_onnx)
processed_image_onnx = Image.fromarray(processed_image_onnx.astype(np.uint8))
processed_image_onnx.save(str(output_dir / 'processed_image_onnx.png'))
```
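Instead of judging the three PNGs by eye, the difference can be put into numbers. The graph ends in ArgMax, so each saved image is a per-pixel class-index map; pixel agreement and mean IoU against the float ONNX output give one figure per artifact set. A minimal sketch with NumPy; the metric helpers and compare_saved_outputs are hypothetical, and it assumes the three PNGs written by the script above are single-channel label maps of the same size.

```python
from pathlib import Path
from typing import Dict, Tuple

import numpy as np


def pixel_agreement(a: np.ndarray, b: np.ndarray) -> float:
    """Fraction of pixels where two label maps assign the same class."""
    if a.shape != b.shape:
        raise ValueError(f"shape mismatch: {a.shape} vs {b.shape}")
    return float(np.mean(a == b))


def mean_iou(pred: np.ndarray, ref: np.ndarray) -> float:
    """Mean IoU over every class present in either map, treating `ref` as the reference."""
    ious = []
    for cls in np.union1d(np.unique(pred), np.unique(ref)):
        p, r = pred == cls, ref == cls
        union = np.logical_or(p, r).sum()  # > 0 because cls occurs in pred or ref
        ious.append(np.logical_and(p, r).sum() / union)
    return float(np.mean(ious))


def compare_saved_outputs(out_dir: str = "./convertedModel") -> Dict[str, Tuple[float, float]]:
    """Compare both TIDL PNGs against the CPU (float) ONNX PNG: {name: (agreement, mIoU)}."""
    from PIL import Image  # imported here so the metric helpers work without Pillow

    out = Path(out_dir)
    ref = np.array(Image.open(out / "processed_image_onnx.png"))
    results = {}
    for name in ("processed_image_custom_script.png", "processed_image_ti_script.png"):
        pred = np.array(Image.open(out / name))
        results[name] = (pixel_agreement(pred, ref), mean_iou(pred, ref))
    return results
```

After the script has run, print(compare_saved_outputs()) reports how far each artifact set drifts from the float model. Using the CPU ONNX output as the reference is a choice made for this sketch, not something the TIDL tools prescribe.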

Here is the compilation log:

```
root@7b296843265d:/home/root/ModelCompilation# python3 minimalConverter.py
tidl_tools_path = /home/root/edgeai-tidl-tools/tidl_tools
artifacts_folder = convertedModel/artifacts
tidl_tensor_bits = 8
debug_level = 1
num_tidl_subgraphs = 16
tidl_denylist =
tidl_denylist_layer_name =
tidl_denylist_layer_type =
model_type =
tidl_calibration_accuracy_level = 7
tidl_calibration_options:num_frames_calibration = 2
tidl_calibration_options:bias_calibration_iterations = 5
mixed_precision_factor = -1.000000
model_group_id = 0
power_of_2_quantization = 2
enable_high_resolution_optimization = 0
pre_batchnorm_fold = 1
add_data_convert_ops = 0
output_feature_16bit_names_list =
m_params_16bit_names_list =
reserved_compile_constraints_flag = 1601
ti_internal_reserved_1 =
****** WARNING : Network not identified as Object Detection network : (1) Ignore if network is not Object Detection network (2) If network is Object Detection network, please specify "model_type":"OD" as part of OSRT compilation options******
Supported TIDL layer type --- Cast --
Supported TIDL layer type --- Add --
Supported TIDL layer type --- Mul --
Supported TIDL layer type --- Conv -- encoder.features.0.0
Supported TIDL layer type --- Relu -- 369
Supported TIDL layer type --- Conv -- encoder.features.1.conv.0.0
Supported TIDL layer type --- Relu -- 372
Supported TIDL layer type --- Conv -- encoder.features.1.conv.1
Supported TIDL layer type --- Conv -- encoder.features.2.conv.0.0
Supported TIDL layer type --- Relu -- 377
Supported TIDL layer type --- Conv -- encoder.features.2.conv.1.0
Supported TIDL layer type --- Relu -- 380
Supported TIDL layer type --- Conv -- encoder.features.2.conv.2
Supported TIDL layer type --- Conv -- encoder.features.3.conv.0.0
Supported TIDL layer type --- Relu -- 385
Supported TIDL layer type --- Conv -- encoder.features.3.conv.1.0
Supported TIDL layer type --- Relu -- 388
Supported TIDL layer type --- Conv -- encoder.features.3.conv.2
Supported TIDL layer type --- Add -- 391
Supported TIDL layer type --- Conv -- encoder.features.4.conv.0.0
Supported TIDL layer type --- Relu -- 394
Supported TIDL layer type --- Conv -- encoder.features.4.conv.1.0
Supported TIDL layer type --- Relu -- 397
Supported TIDL layer type --- Conv -- encoder.features.4.conv.2
Supported TIDL layer type --- Conv -- encoder.features.5.conv.0.0
Supported TIDL layer type --- Relu -- 402
Supported TIDL layer type --- Conv -- encoder.features.5.conv.1.0
Supported TIDL layer type --- Relu -- 405
Supported TIDL layer type --- Conv -- encoder.features.5.conv.2
Supported TIDL layer type --- Add -- 408
Supported TIDL layer type --- Conv -- encoder.features.6.conv.0.0
Supported TIDL layer type --- Relu -- 411
Supported TIDL layer type --- Conv -- encoder.features.6.conv.1.0
Supported TIDL layer type --- Relu -- 414
Supported TIDL layer type --- Conv -- encoder.features.6.conv.2
Supported TIDL layer type --- Add -- 417
Supported TIDL layer type --- Conv -- encoder.features.7.conv.0.0
Supported TIDL layer type --- Relu -- 420
Supported TIDL layer type --- Conv -- encoder.features.7.conv.1.0
Supported TIDL layer type --- Relu -- 423
Supported TIDL layer type --- Conv -- encoder.features.7.conv.2
Supported TIDL layer type --- Conv -- encoder.features.8.conv.0.0
Supported TIDL layer type --- Relu -- 428
Supported TIDL layer type --- Conv -- encoder.features.8.conv.1.0
Supported TIDL layer type --- Relu -- 431
Supported TIDL layer type --- Conv -- encoder.features.8.conv.2
Supported TIDL layer type --- Add -- 434
Supported TIDL layer type --- Conv -- encoder.features.9.conv.0.0
Supported TIDL layer type --- Relu -- 437
Supported TIDL layer type --- Conv -- encoder.features.9.conv.1.0
Supported TIDL layer type --- Relu -- 440
Supported TIDL layer type --- Conv -- encoder.features.9.conv.2
Supported TIDL layer type --- Add -- 443
Supported TIDL layer type --- Conv -- encoder.features.10.conv.0.0
Supported TIDL layer type --- Relu -- 446
Supported TIDL layer type --- Conv -- encoder.features.10.conv.1.0
Supported TIDL layer type --- Relu -- 449
Supported TIDL layer type --- Conv -- encoder.features.10.conv.2
Supported TIDL layer type --- Add -- 452
Supported TIDL layer type --- Conv -- encoder.features.11.conv.0.0
Supported TIDL layer type --- Relu -- 455
Supported TIDL layer type --- Conv -- encoder.features.11.conv.1.0
Supported TIDL layer type --- Relu -- 458
Supported TIDL layer type --- Conv -- encoder.features.11.conv.2
Supported TIDL layer type --- Conv -- encoder.features.12.conv.0.0
Supported TIDL layer type --- Relu -- 463
Supported TIDL layer type --- Conv -- encoder.features.12.conv.1.0
Supported TIDL layer type --- Relu -- 466
Supported TIDL layer type --- Conv -- encoder.features.12.conv.2
Supported TIDL layer type --- Add -- 469
Supported TIDL layer type --- Conv -- encoder.features.13.conv.0.0
Supported TIDL layer type --- Relu -- 472
Supported TIDL layer type --- Conv -- encoder.features.13.conv.1.0
Supported TIDL layer type --- Relu -- 475
Supported TIDL layer type --- Conv -- encoder.features.13.conv.2
Supported TIDL layer type --- Add -- 478
Supported TIDL layer type --- Conv -- encoder.features.14.conv.0.0
Supported TIDL layer type --- Relu -- 481
Supported TIDL layer type --- Conv -- encoder.features.14.conv.1.0
Supported TIDL layer type --- Relu -- 484
Supported TIDL layer type --- Conv -- encoder.features.14.conv.2
Supported TIDL layer type --- Conv -- encoder.features.15.conv.0.0
Supported TIDL layer type --- Relu -- 489
Supported TIDL layer type --- Conv -- encoder.features.15.conv.1.0
Supported TIDL layer type --- Relu -- 492
Supported TIDL layer type --- Conv -- encoder.features.15.conv.2
Supported TIDL layer type --- Add -- 495
Supported TIDL layer type --- Conv -- encoder.features.16.conv.0.0
Supported TIDL layer type --- Relu -- 498
Supported TIDL layer type --- Conv -- encoder.features.16.conv.1.0
Supported TIDL layer type --- Relu -- 501
Supported TIDL layer type --- Conv -- encoder.features.16.conv.2
Supported TIDL layer type --- Add -- 504
Supported TIDL layer type --- Conv -- encoder.features.17.conv.0.0
Supported TIDL layer type --- Relu -- 507
Supported TIDL layer type --- Conv -- encoder.features.17.conv.1.0
Supported TIDL layer type --- Relu -- 510
Supported TIDL layer type --- Conv -- encoder.features.17.conv.2
Supported TIDL layer type --- Conv -- decoders.0.aspp.aspp_bra3.0.0
Supported TIDL layer type --- Relu -- 533
Supported TIDL layer type --- Conv -- decoders.0.aspp.aspp_bra3.1.0
Supported TIDL layer type --- Relu -- 536
Supported TIDL layer type --- Conv -- decoders.0.aspp.aspp_bra2.0.0
Supported TIDL layer type --- Relu -- 527
Supported TIDL layer type --- Conv -- decoders.0.aspp.aspp_bra2.1.0
Supported TIDL layer type --- Relu -- 530
Supported TIDL layer type --- Conv -- decoders.0.aspp.aspp_bra1.0.0
Supported TIDL layer type --- Relu -- 521
Supported TIDL layer type --- Conv -- decoders.0.aspp.aspp_bra1.1.0
Supported TIDL layer type --- Relu -- 524
Supported TIDL layer type --- Conv -- decoders.0.aspp.conv1x1.0
Supported TIDL layer type --- Relu -- 518
Supported TIDL layer type --- Concat -- 537
Supported TIDL layer type --- Conv -- decoders.0.aspp.aspp_out.0
Supported TIDL layer type --- Relu -- 540
Supported TIDL layer type --- Resize -- 571
Supported TIDL layer type --- Conv -- decoders.0.shortcut.0
Supported TIDL layer type --- Relu -- 515
Supported TIDL layer type --- Concat -- 516
Supported TIDL layer type --- Conv -- decoders.0.pred.0.0
Supported TIDL layer type --- Conv -- decoders.0.pred.1.0
Supported TIDL layer type --- Resize -- 576
Supported TIDL layer type --- ArgMax -- 565
Supported TIDL layer type --- Cast --
Preliminary subgraphs created = 1
Final number of subgraphs created are : 1, - Offloaded Nodes - 124, Total Nodes - 124
INFORMATION -- [TIDL_ResizeLayer] Any resize ratio which is power of 2 and greater than 4 will be placed by combination of 4x4 resize layer and 2x2 resize layer. For example a 8x8 resize will be replaced by 4x4 resize followed by 2x2 resize.
INFORMATION -- [TIDL_ResizeLayer] Any resize ratio which is power of 2 and greater than 4 will be placed by combination of 4x4 resize layer and 2x2 resize layer. For example a 8x8 resize will be replaced by 4x4 resize followed by 2x2 resize.
Running runtimes graphviz - /home/root/edgeai-tidl-tools/tidl_tools/tidl_graphVisualiser_runtimes.out convertedModel/artifacts/allowedNode.txt convertedModel/artifacts/tempDir/graphvizInfo.txt convertedModel/artifacts/tempDir/runtimes_visualization.svg
*** In TIDL_createStateImportFunc ***
Compute on node : TIDLExecutionProvider_TIDL_0_0
0, Cast, 1, 1, input.1Net_IN, TIDL_cast_in
1, Add, 2, 1, TIDL_cast_in, TIDL_Scale_In
2, Mul, 2, 1, TIDL_Scale_In, input.1
3, Conv, 3, 1, input.1, 369
4, Relu, 1, 1, 369, 370
5, Conv, 3, 1, 370, 372
6, Relu, 1, 1, 372, 373
7, Conv, 3, 1, 373, 375
8, Conv, 3, 1, 375, 377
9, Relu, 1, 1, 377, 378
10, Conv, 3, 1, 378, 380
11, Relu, 1, 1, 380, 381
12, Conv, 3, 1, 381, 383
13, Conv, 3, 1, 383, 385
14, Relu, 1, 1, 385, 386
15, Conv, 3, 1, 386, 388
16, Relu, 1, 1, 388, 389
17, Conv, 3, 1, 389, 391
18, Add, 2, 1, 383, 392
19, Conv, 3, 1, 392, 515
20, Relu, 1, 1, 515, 516
21, Conv, 3, 1, 392, 394
22, Relu, 1, 1, 394, 395
23, Conv, 3, 1, 395, 397
24, Relu, 1, 1, 397, 398
25, Conv, 3, 1, 398, 400
26, Conv, 3, 1, 400, 402
27, Relu, 1, 1, 402, 403
28, Conv, 3, 1, 403, 405
29, Relu, 1, 1, 405, 406
30, Conv, 3, 1, 406, 408
31, Add, 2, 1, 400, 409
32, Conv, 3, 1, 409, 411
33, Relu, 1, 1, 411, 412
34, Conv, 3, 1, 412, 414
35, Relu, 1, 1, 414, 415
36, Conv, 3, 1, 415, 417
37, Add, 2, 1, 409, 418
38, Conv, 3, 1, 418, 420
39, Relu, 1, 1, 420, 421
40, Conv, 3, 1, 421, 423
41, Relu, 1, 1, 423, 424
42, Conv, 3, 1, 424, 426
43, Conv, 3, 1, 426, 428
44, Relu, 1, 1, 428, 429
45, Conv, 3, 1, 429, 431
46, Relu, 1, 1, 431, 432
47, Conv, 3, 1, 432, 434
48, Add, 2, 1, 426, 435
49, Conv, 3, 1, 435, 437
50, Relu, 1, 1, 437, 438
51, Conv, 3, 1, 438, 440
52, Relu, 1, 1, 440, 441
53, Conv, 3, 1, 441, 443
54, Add, 2, 1, 435, 444
55, Conv, 3, 1, 444, 446
56, Relu, 1, 1, 446, 447
57, Conv, 3, 1, 447, 449
58, Relu, 1, 1, 449, 450
59, Conv, 3, 1, 450, 452
60, Add, 2, 1, 444, 453
61, Conv, 3, 1, 453, 455
62, Relu, 1, 1, 455, 456
63, Conv, 3, 1, 456, 458
64, Relu, 1, 1, 458, 459
65, Conv, 3, 1, 459, 461
66, Conv, 3, 1, 461, 463
67, Relu, 1, 1, 463, 464
68, Conv, 3, 1, 464, 466
69, Relu, 1, 1, 466, 467
70, Conv, 3, 1, 467, 469
71, Add, 2, 1, 461, 470
72, Conv, 3, 1, 470, 472
73, Relu, 1, 1, 472, 473
74, Conv, 3, 1, 473, 475
75, Relu, 1, 1, 475, 476
76, Conv, 3, 1, 476, 478
77, Add, 2, 1, 470, 479
78, Conv, 3, 1, 479, 481
79, Relu, 1, 1, 481, 482
80, Conv, 3, 1, 482, 484
81, Relu, 1, 1, 484, 485
82, Conv, 3, 1, 485, 487
83, Conv, 3, 1, 487, 489
84, Relu, 1, 1, 489, 490
85, Conv, 3, 1, 490, 492
86, Relu, 1, 1, 492, 493
87, Conv, 3, 1, 493, 495
88, Add, 2, 1, 487, 496
89, Conv, 3, 1, 496, 498
90, Relu, 1, 1, 498, 499
91, Conv, 3, 1, 499, 501
92, Relu, 1, 1, 501, 502
93, Conv, 3, 1, 502, 504
94, Add, 2, 1, 496, 505
95, Conv, 3, 1, 505, 507
96, Relu, 1, 1, 507, 508
97, Conv, 3, 1, 508, 510
98, Relu, 1, 1, 510, 511
99, Conv, 3, 1, 511, 513
100, Conv, 3, 1, 513, 518
101, Relu, 1, 1, 518, 519
102, Conv, 3, 1, 513, 521
103, Relu, 1, 1, 521, 522
104, Conv, 3, 1, 522, 524
105, Relu, 1, 1, 524, 525
106, Conv, 3, 1, 513, 527
107, Relu, 1, 1, 527, 528
108, Conv, 3, 1, 528, 530
109, Relu, 1, 1, 530, 531
110, Conv, 3, 1, 513, 533
111, Relu, 1, 1, 533, 534
112, Conv, 3, 1, 534, 536
113, Relu, 1, 1, 536, 537
114, Concat, 4, 1, 519, 538
115, Conv, 3, 1, 538, 540
116, Relu, 1, 1, 540, 541
117, Resize, 3, 1, 541, 551
118, Concat, 2, 1, 551, 552
119, Conv, 3, 1, 552, 554
120, Conv, 2, 1, 554, 555
121, Resize, 3, 1, 555, 565
122, ArgMax, 1, 1, 565, 566
123, Cast, 1, 1, 566, 566TIDL_cast_out
Input tensor name - input.1Net_IN
Output tensor name - 566TIDL_cast_out
In TIDL_onnxRtImportInit subgraph_name=566TIDL_cast_out
Layer 0, subgraph id 566TIDL_cast_out, name=566TIDL_cast_out
Layer 1, subgraph id 566TIDL_cast_out, name=input.1Net_IN
In TIDL_runtimesOptimizeNet: LayerIndex = 126, dataIndex = 125
************** Frame index 1 : Running float import *************
In TIDL_runtimesPostProcessNet
INFORMATION: [TIDL_ResizeLayer] 571 Any resize ratio which is power of 2 and greater than 4 will be placed by combination of 4x4 resize layer and 2x2 resize layer. For example a 8x8 resize will be replaced by 4x4 resize followed by 2x2 resize.
INFORMATION: [TIDL_ResizeLayer] 576 Any resize ratio which is power of 2 and greater than 4 will be placed by combination of 4x4 resize layer and 2x2 resize layer. For example a 8x8 resize will be replaced by 4x4 resize followed by 2x2 resize.
WARNING: [TIDL_E_DATAFLOW_INFO_NULL] Network compiler returned with error or didn't executed, this model can only be used on PC/Host emulation mode, it is not expected to work on target/EVM.
****************************************************
** 3 WARNINGS 0 ERRORS **
****************************************************
************ in TIDL_subgraphRtCreate ************
The soft limit is 2048
The hard limit is 2048
MEM: Init ... !!!
MEM: Init ... Done !!!
0.0s: VX_ZONE_INIT:Enabled
0.16s: VX_ZONE_ERROR:Enabled
0.19s: VX_ZONE_WARNING:Enabled
0.3255s: VX_ZONE_INIT:[tivxInit:184] Initialization Done !!!
************ TIDL_subgraphRtCreate done ************
******* In TIDL_subgraphRtInvoke ********
Layer, Layer Cycles,kernelOnlyCycles, coreLoopCycles,LayerSetupCycles,dmaPipeupCycles, dmaPipeDownCycles, PrefetchCycles,copyKerCoeffCycles,LayerDeinitCycles,LastBlockCycles, paddingTrigger, paddingWait,LayerWithoutPad,LayerHandleCopy, BackupCycles, RestoreCycles,
1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
0, 0, 0,
...
Sum of Layer Cycles 0
Sub Graph Stats 590.000000 7308169.000000 1098.000000
******* TIDL_subgraphRtInvoke done ********
********** Frame Index 1 : Running float inference **********
******* In TIDL_subgraphRtInvoke ********
Layer, Layer Cycles,kernelOnlyCycles, coreLoopCycles,LayerSetupCycles,dmaPipeupCycles, dmaPipeDownCycles, PrefetchCycles,copyKerCoeffCycles,LayerDeinitCycles,LastBlockCycles, paddingTrigger, paddingWait,LayerWithoutPad,LayerHandleCopy, BackupCycles, RestoreCycles,
1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
0, 0, 0,
...
Sum of Layer Cycles 0
Sub Graph Stats 339.000000 8012666.000000 5055.000000
******* TIDL_subgraphRtInvoke done ********
********** Frame Index 2 : Running fixed point mode for calibration **********
In TIDL_runtimesPostProcessNet
~~~~~Running TIDL in PC emulation mode to collect Activations range for each layer~~~~~
Processing config file #0 : /home/root/ModelCompilation/convertedModel/artifacts/tempDir/566TIDL_cast_out_tidl_io_.qunat_stats_config.txt
----------------------- TIDL Process with REF_ONLY FLOW ------------------------
# 0 . .. T 9205.90 .... ..... ... .... .....
# 1 . .. T 8825.61 .... ..... ... .... .....
~~~~~Running TIDL in PC emulation mode to collect Activations range for each layer~~~~~
Processing config file #0 : /home/root/ModelCompilation/convertedModel/artifacts/tempDir/566TIDL_cast_out_tidl_io_.qunat_stats_config.txt
----------------------- TIDL Process with REF_ONLY FLOW ------------------------
# 0 . .. T 6959.97 .... ..... ... .... .....
# 1 . .. T 6545.05 .... ..... ... .... .....
***************** Calibration iteration number 0 completed ************************
~~~~~Running TIDL in PC emulation mode to collect Activations range for each layer~~~~~
Processing config file #0 : /home/root/ModelCompilation/convertedModel/artifacts/tempDir/566TIDL_cast_out_tidl_io_.qunat_stats_config.txt
----------------------- TIDL Process with REF_ONLY FLOW ------------------------
# 0 . .. T 7138.41 .... ..... ... .... .....
# 1 . .. T 7795.76 .... ..... ... .... .....
***************** Calibration iteration number 1 completed ************************
~~~~~Running TIDL in PC emulation mode to collect Activations range for each layer~~~~~
Processing config file #0 : /home/root/ModelCompilation/convertedModel/artifacts/tempDir/566TIDL_cast_out_tidl_io_.qunat_stats_config.txt
----------------------- TIDL Process with REF_ONLY FLOW ------------------------
# 0 . .. T 8064.94 .... ..... ... .... .....
# 1 . .. T 7933.37 .... ..... ... .... .....
***************** Calibration iteration number 2 completed ************************
~~~~~Running TIDL in PC emulation mode to collect Activations range for each layer~~~~~
Processing config file #0 : /home/root/ModelCompilation/convertedModel/artifacts/tempDir/566TIDL_cast_out_tidl_io_.qunat_stats_config.txt
----------------------- TIDL Process with REF_ONLY FLOW ------------------------
# 0 . .. T 6983.47 .... ..... ... .... .....
# 1 . .. T 7395.72 .... ..... ... .... .....
***************** Calibration iteration number 3 completed ************************
~~~~~Running TIDL in PC emulation mode to collect Activations range for each layer~~~~~
Processing config file #0 : /home/root/ModelCompilation/convertedModel/artifacts/tempDir/566TIDL_cast_out_tidl_io_.qunat_stats_config.txt
----------------------- TIDL Process with REF_ONLY FLOW ------------------------
# 0 . .. T 6909.32 .... ..... ... .... .....
# 1 . .. T 7225.14 .... ..... ... .... .....
***************** Calibration iteration number 4 completed ************************
------------------ Network Compiler Traces -----------------------------
successful Memory allocation
INFORMATION: [TIDL_ResizeLayer] 571 Any resize ratio which is power of 2 and greater than 4 will be placed by combination of 4x4 resize layer and 2x2 resize layer. For example a 8x8 resize will be replaced by 4x4 resize followed by 2x2 resize.
INFORMATION: [TIDL_ResizeLayer] 576 Any resize ratio which is power of 2 and greater than 4 will be placed by combination of 4x4 resize layer and 2x2 resize layer. For example a 8x8 resize will be replaced by 4x4 resize followed by 2x2 resize.
****************************************************
** 2 WARNINGS 0 ERRORS **
****************************************************
libtidl_onnxrt_EP loaded 0x6bff990
artifacts_folder = convertedModel/artifacts
debug_level = 1
target_priority = 0
max_pre_empt_delay = 340282346638528859811704183484516925440.000000
Final number of subgraphs created are : 1, - Offloaded Nodes - 124, Total Nodes - 124
In TIDL_createStateInfer
Compute on node : TIDLExecutionProvider_TIDL_0_0
************ in TIDL_subgraphRtCreate ************
************ TIDL_subgraphRtCreate done ************
******* In TIDL_subgraphRtInvoke ********
Layer, Layer Cycles,kernelOnlyCycles, coreLoopCycles,LayerSetupCycles,dmaPipeupCycles, dmaPipeDownCycles, PrefetchCycles,copyKerCoeffCycles,LayerDeinitCycles,LastBlockCycles, paddingTrigger, paddingWait,LayerWithoutPad,LayerHandleCopy, BackupCycles, RestoreCycles,
1, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,
0, 0, 0,
...
Sum of Layer Cycles 0
Sub Graph Stats 170.000000 1235536.000000 822.000000
******* TIDL_subgraphRtInvoke done ********
************ in TIDL_subgraphRtDelete ************
************ in TIDL_subgraphRtDelete ************
MEM: Deinit ... !!!
MEM: Alloc's: 54 alloc's of 782360197 bytes
MEM: Free's : 54 free's of 782360197 bytes
MEM: Open's : 0 allocs of 0 bytes
MEM: Deinit ... Done !!!
```
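The import stage echoes the effective options at the top of the log (lines such as tidl_tensor_bits = 8 or tidl_calibration_accuracy_level = 7). Saving the console output of both scripts and diffing these echoed key = value lines is a cheap way to spot a setting that did not end up the same in both runs. A minimal sketch; the helpers are hypothetical, and they only look at lines of the form key = value without assuming which keys matter.

```python
import re
from typing import Dict, Optional, Tuple

# Matches echoed option lines such as "tidl_tensor_bits = 8" or "tidl_denylist =".
_OPTION_LINE = re.compile(r"^\s*([A-Za-z_][\w:.]*)\s*=\s*(.*?)\s*$")


def parse_echoed_options(log_text: str) -> Dict[str, str]:
    """Collect key = value lines from a compilation log; the first occurrence of a key wins."""
    options: Dict[str, str] = {}
    for line in log_text.splitlines():
        m = _OPTION_LINE.match(line)
        if m and m.group(1) not in options:
            options[m.group(1)] = m.group(2)
    return options


def diff_echoed_options(log_a: str, log_b: str) -> Dict[str, Tuple[Optional[str], Optional[str]]]:
    """Return {key: (value_in_a, value_in_b)} for echoed options that differ (None = absent)."""
    a, b = parse_echoed_options(log_a), parse_echoed_options(log_b)
    return {k: (a.get(k), b.get(k)) for k in sorted(set(a) | set(b)) if a.get(k) != b.get(k)}
```

For example, capture each run with something like python3 minimalConverter.py 2>&1 | tee custom.log (and the same for onnxrt_ep.py into a second, hypothetically named file), then pass the two files' contents to diff_echoed_options.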

My question: why does the model compiled by my custom script not perform as well as the one compiled by the example script?

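PS: The warning "WARNING: [TIDL_E_DATAFLOW_INFO_NULL] Network compiler returned with error or didn't executed, this model can only be used on PC/Host emulation mode, it is not expected to work on target/EVM." is also raised by the onnxrt_ep.py script, yet the resulting model still runs in ~10 ms on the SK-TDA4VM.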
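To compare the two artifact sets on the SK-TDA4VM under identical conditions, each session can be timed the same way: a few unmeasured warm-up calls, then the median over repeated session.run calls. A minimal sketch in plain Python; time_inference is a hypothetical helper that accepts any zero-argument callable.

```python
import statistics
import time
from typing import Callable, Dict


def time_inference(run: Callable[[], object], warmup: int = 5, repeats: int = 50) -> Dict[str, float]:
    """Wall-clock timing of `run` in milliseconds: median, min and max over `repeats` calls."""
    if repeats < 1:
        raise ValueError("repeats must be >= 1")
    for _ in range(warmup):
        run()  # warm-up calls are executed but not measured
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {"median_ms": statistics.median(samples),
            "min_ms": min(samples),
            "max_ms": max(samples)}
```

Usage on the board: time_inference(lambda: session.run(None, {input_name: test_image})), with session being the TIDLExecutionProvider session for either artifact folder. Because it measures wall-clock time around session.run from Python, the result includes ONNX Runtime and Python overhead and is not the same as the per-subgraph numbers printed with TIDL_RT_PERFSTATS.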