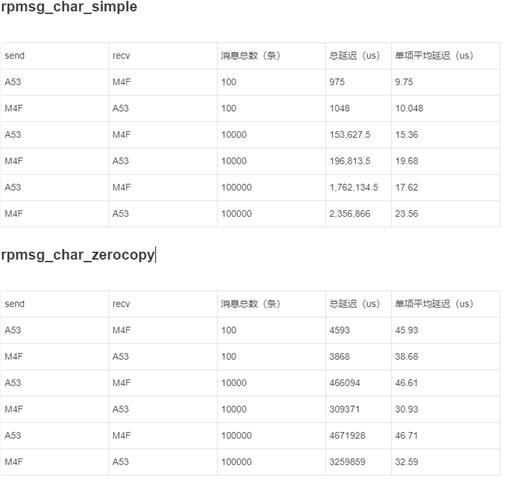

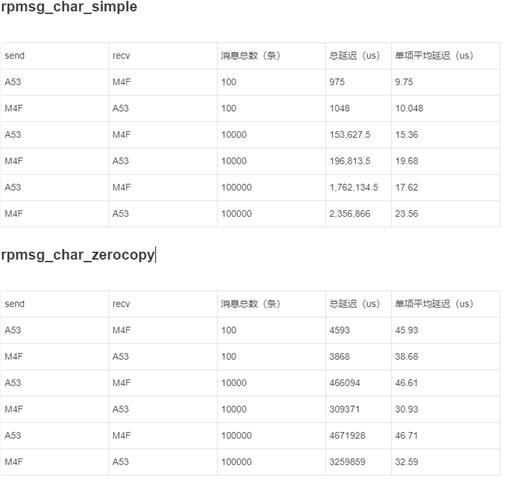

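I want to know which of these two inter-core communication examples transfers data more efficiently. Here are my test results; the rpmsg_char_simple results are similar to those given in the official documentation.

Is it correct to conclude that rpmsg_char_zerocopy is slower than rpmsg_char_simple?

Why would rpmsg_char_zerocopy be slower?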

> Here are my test results; the rpmsg_char_simple results are similar to those given in the official documentation.

How did you run the test? Which official document are you referring to?

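Thank you for your interest in TI products! Because this question is fairly complex, we have posted it on the E2E English technical forum, where a senior engineer will help you. You can also follow the progress in the thread below: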

https://e2e.ti.com/support/processors-group/processors/f/processors-forum/1330423/am625-rpmsg_char_zerocopy-performance

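Please follow the method suggested by the engineer below to measure how long each of the two demos takes to transfer data.

The engineer gave a detailed explanation: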

The output numbers the customer provided do not match the code snippet that you attached. I am not sure how they generated their numbers, so I cannot tell you exactly what is going on.

Does the code snippet from rpmsg_char_zerocopy actually measure latency? No.

t measures the time it takes to execute the function send_msg. It does NOT measure the time between when Linux sends the RPMsg and when the remote core receives and processes it.

t2 measures (the time for the remote core to receive the RPMsg, process it, execute any other code it wants to run, rewrite the shared memory, and send an RPMsg back to Linux, plus the time for Linux to receive and process that RPMsg) minus (the time spent going through all the print statements on the Linux side).

Neither t, nor t2, actually measures RPMsg latency.
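Since neither t nor t2 isolates RPMsg latency, a closer approximation is to time complete round trips (Linux sends a message, the remote core replies, Linux reads the reply) with nothing else, such as printing, inside the timed region. The C sketch below is not taken from either TI example: `roundtrip_fn`, `measure_roundtrip`, and `struct latency_stats` are hypothetical names, and the callback is assumed to wrap the send and the blocking receive on your RPMsg endpoint. A round trip covers two RPMsg hops plus the remote core's processing, so it is an upper bound on one-way latency, not the one-way latency itself.

```c
#define _POSIX_C_SOURCE 199309L
#include <time.h>

/* Hypothetical callback: sends one message to the remote core and blocks
 * until the reply has been read back on Linux. In a real test this would
 * wrap the send/receive pair on the RPMsg endpoint (assumption). */
typedef int (*roundtrip_fn)(void *ctx);

struct latency_stats {
    double min_us;
    double avg_us;
    double max_us;
    int completed; /* number of round trips that succeeded */
};

static double elapsed_us(const struct timespec *start, const struct timespec *end)
{
    return (double)(end->tv_sec - start->tv_sec) * 1e6 +
           (double)(end->tv_nsec - start->tv_nsec) / 1e3;
}

/* Times each round trip individually so the worst case is recorded instead
 * of being hidden in an average. Nothing is printed inside the timed region.
 * Returns 0 on success, -1 as soon as any round trip fails. */
int measure_roundtrip(roundtrip_fn roundtrip, void *ctx, int iterations,
                      struct latency_stats *out)
{
    double total = 0.0;
    out->min_us = 0.0;
    out->avg_us = 0.0;
    out->max_us = 0.0;
    out->completed = 0;

    for (int i = 0; i < iterations; i++) {
        struct timespec t0, t1;
        clock_gettime(CLOCK_MONOTONIC, &t0);
        if (roundtrip(ctx) != 0)
            return -1;
        clock_gettime(CLOCK_MONOTONIC, &t1);

        double us = elapsed_us(&t0, &t1);
        if (out->completed == 0 || us < out->min_us)
            out->min_us = us;
        if (us > out->max_us)
            out->max_us = us;
        total += us;
        out->completed++;
    }
    if (out->completed > 0)
        out->avg_us = total / out->completed;
    return 0;
}
```

Keeping the minimum and maximum alongside the average is deliberate: on Linux, rare worst-case outliers would otherwise disappear into the mean.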

Ok, so what could the customer do if they DO want to benchmark the zerocopy example?

The whole point of the zerocopy example is to show how to move large amounts of data between cores. This is NOT an example of how to minimize latency. It is an example of how to maximize THROUGHPUT.
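Because the zerocopy example targets throughput, a fairer benchmark times how long it takes to move a large, fixed amount of data and reports bytes per second, rather than timing a single message. A minimal sketch, assuming a hypothetical `transfer_fn` callback that moves the whole buffer with the mechanism under test and returns only after the remote core has confirmed it consumed the data; `measure_throughput` and `throughput_mib_s` are illustrative names, not TI APIs.

```c
#define _POSIX_C_SOURCE 199309L
#include <stddef.h>
#include <time.h>

/* Hypothetical callback: moves `len` bytes to the remote core using the
 * mechanism under test (a train of RPMsg messages, or one shared-memory
 * buffer plus a single notification) and returns 0 once the remote core
 * has confirmed it consumed the data (assumption). */
typedef int (*transfer_fn)(void *ctx, size_t len);

/* Converts a byte count and an elapsed time into MiB/s.
 * Returns 0.0 if the elapsed time is not positive. */
double throughput_mib_s(size_t bytes, double seconds)
{
    if (seconds <= 0.0)
        return 0.0;
    return ((double)bytes / (1024.0 * 1024.0)) / seconds;
}

/* Times one complete transfer of `total_bytes` and returns MiB/s,
 * or a negative value if the transfer fails. */
double measure_throughput(transfer_fn transfer, void *ctx, size_t total_bytes)
{
    struct timespec t0, t1;
    clock_gettime(CLOCK_MONOTONIC, &t0);
    if (transfer(ctx, total_bytes) != 0)
        return -1.0;
    clock_gettime(CLOCK_MONOTONIC, &t1);

    double seconds = (double)(t1.tv_sec - t0.tv_sec) +
                     (double)(t1.tv_nsec - t0.tv_nsec) / 1e9;
    return throughput_mib_s(total_bytes, seconds);
}
```

Run the same total size through both mechanisms (for example, many 496-byte RPMsg messages versus one shared-memory buffer) so the two numbers are directly comparable.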

If I were a customer benchmarking the zerocopy example, I would compare code like this:

rpmsg_char_simple:

Why else would I want to use the zerocopy example?

One use case I have seen: customers send data through RPMsg 496 bytes at a time, in a single direction, and reach an overflow situation where the sending core sends data faster than the receiving core can keep up. Eventually they start losing data.
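The overflow case can be illustrated with a toy model: a bounded queue that the sender fills faster than the receiver drains it. This is a hypothetical simulation, not a model of how the RPMsg/virtio stack actually handles a full queue (depending on the application, a sender may block or get an error instead of silently losing data); `count_dropped` and its parameters are illustrative.

```c
#include <stddef.h>

/* Toy model of one-way streaming into a bounded queue of `capacity` slots.
 * Each tick the sender produces `produced_per_tick` messages, then the
 * receiver drains up to `consumed_per_tick`. Messages that arrive while
 * the queue is full are counted as lost. */
size_t count_dropped(size_t capacity, size_t produced_per_tick,
                     size_t consumed_per_tick, size_t ticks)
{
    size_t queued = 0;
    size_t dropped = 0;

    for (size_t t = 0; t < ticks; t++) {
        /* Sender side: enqueue if there is room, otherwise the message is lost. */
        for (size_t i = 0; i < produced_per_tick; i++) {
            if (queued < capacity)
                queued++;
            else
                dropped++;
        }
        /* Receiver side: drain at most consumed_per_tick messages. */
        queued -= (queued < consumed_per_tick) ? queued : consumed_per_tick;
    }
    return dropped;
}
```

For example, with 4 slots, 2 messages produced and 1 consumed per tick, the queue fills after three ticks and every later tick loses one message, so 10 ticks lose 7 messages. Once the receiver keeps pace (1 in, 1 out), nothing is lost.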

Instead of interrupting the receiving core for every 496 bytes of data, a shared-memory approach lets the sending core transmit the same amount of data with fewer interrupts. That can help the receiving core run more efficiently: it is interrupted less often, so it has to context switch less.
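The interrupt savings are simple arithmetic: with one notification per message, the receiver sees the ceiling of the total byte count divided by the per-message payload. A small helper (the name `notifications_needed` is hypothetical) makes the comparison concrete:

```c
#include <stddef.h>

/* Number of notifications (interrupts) the receiving core sees when
 * `total_bytes` are sent in chunks of at most `bytes_per_notification`.
 * This is a ceiling division; a zero chunk size is rejected by returning 0
 * to avoid dividing by zero. */
size_t notifications_needed(size_t total_bytes, size_t bytes_per_notification)
{
    if (bytes_per_notification == 0)
        return 0;
    return (total_bytes + bytes_per_notification - 1) / bytes_per_notification;
}
```

For 1 MiB (1,048,576 bytes), that is 2115 notifications at 496 bytes per message, versus 1 if the whole buffer is placed in shared memory and announced with a single message, assuming the shared region is large enough to hold it.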

What if I am trying to minimize latency between Linux and a remote core?

First of all, make sure that you ACTUALLY want Linux to be in a critical control path where low, deterministic latency is required. Even RT Linux is NOT a true real-time OS, so there is always the risk that Linux will miss timing eventually. Refer here for more details: https://e2e.ti.com/support/processors-group/processors/f/processors-forum/1085663/faq-sitara-multicore-system-design-how-to-ensure-computations-occur-within-a-set-cycle-time

Additionally, Linux RPMsg is NOT currently designed to be deterministic. The average latency may be on the order of tens of microseconds to 100 microseconds, but the worst-case latency CAN, on rare occasions, spike to 1 ms or more in Linux kernel 6.1 and earlier.

RPMsg is the TI-supported IPC between Linux and a remote core. If you do not actually need to send 496 bytes of data with each message, you could implement your own IPC, such as one built only on mailboxes. Keep in mind that TI does NOT provide support for mailbox communication between Linux userspace and remote cores. If the customer decides to develop their own mailbox IPC, we will NOT be able to support that development.