I have tried these two models:

https://github.com/TexasInstruments/edgeai-yolov5/tree/master/pretrained_models/models/yolov5s6_640_ti_lite/weights/yolov5s6_640_ti_lite_37p4_56p0.onnx

https://github.com/TexasInstruments/edgeai-yolov5/tree/master/pretrained_models/models/yolov5s6_640_ti_lite/weights/best.pt

I converted best.pt to best.onnx using the command below: `python export.py --weights pretrained_models/yolov5s6_640_ti_lite/weights/best.pt --img 640 --batch 1 --simplify --export-nms --opset 11`

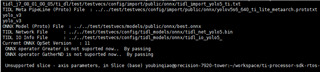

When I used ./out/tidl_model_import.out to compile yolov5s6_640_ti_lite_37p4_56p0.onnx and best.onnx into bin files, yolov5s6_640_ti_lite_37p4_56p0.onnx compiled successfully, but best.onnx failed. The attached picture shows the error log.
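In case it helps narrow this down, here is a rough sketch of how the two ONNX files could be compared by operator type, to see whether my exported best.onnx contains layers that the working model does not. It assumes the `onnx` Python package is installed and both files are available locally; the function names `op_types` and `diff_op_counts` are my own, not part of any TI tool.

```python
import sys
from collections import Counter


def op_types(onnx_path):
    """Return the operator type of every node in an ONNX graph."""
    import onnx  # imported lazily; assumes the `onnx` package is installed

    model = onnx.load(onnx_path)
    return [node.op_type for node in model.graph.node]


def diff_op_counts(ops_a, ops_b):
    """Return {op_type: (count_a, count_b)} for every op whose count differs."""
    count_a, count_b = Counter(ops_a), Counter(ops_b)
    return {
        op: (count_a[op], count_b[op])
        for op in sorted(set(count_a) | set(count_b))
        if count_a[op] != count_b[op]
    }


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python compare_onnx.py <working.onnx> <failing.onnx>
    working, failing = sys.argv[1], sys.argv[2]
    for op, (n_ok, n_bad) in diff_op_counts(op_types(working), op_types(failing)).items():
        print(f"{op}: working={n_ok} failing={n_bad}")
```

Any operator that shows up only in best.onnx would be a first candidate for the import failure, although this only compares operator counts and not attributes or tensor shapes.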

My question is: if I want to train on my own dataset and run the model on TDA4, I need a .pt file that can be exported to ONNX, and the resulting ONNX file must compile to a bin file successfully. Where is that .pt file located?