Hi TI,

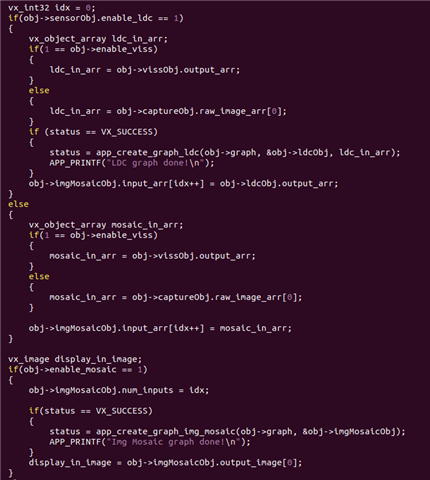

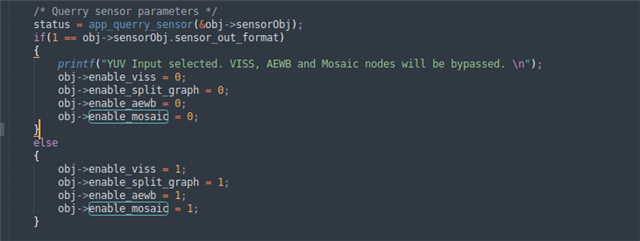

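We are using the TDA4VH with SDK 8.6, and we use the mosaic node to stitch four 1920x1080 camera streams into a single 3840x2160 frame. During stitching, the errors below are reported. How can we resolve this? Thanks.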

```
[MCU2_0] 76.678657 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 76.711825 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 76.745147 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 76.778350 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 76.811661 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 76.844832 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 76.878109 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 76.911438 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 76.944598 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 76.977914 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.011137 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.044405 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.077614 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.110888 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.144195 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.177410 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.210707 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.243887 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.277194 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.310391 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.343669 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.376967 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.410175 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.443499 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.476675 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.509961 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.543172 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.576462 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.609755 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.642964 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.676259 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
[MCU2_0] 77.709430 s: VX_ZONE_ERROR:[tivxKernelImgMosaicMscDrvSubmit:1074] Failed to Submit Request
```